Introduction

Robots are complex systems designed to perform complex tasks. Because a robot's structure cannot be cleanly separated into hardware and software, the term 'middleware' is often used to describe the software foundation of robotic and cybernetic systems.

A robot's complexity lies in the functions it is designed to perform. It must constantly monitor its surroundings in real time and respond to changes in the environment, while filtering out sensor errors and noise. It must also carry out tasks and respond to commands. Because of this complexity, designing a robot's architecture was, for a long time, more of an art than an engineering discipline.

1. Robot Evolution and Its Architectural Wonders

1.1 Shakey: The Pioneer

Shakey was arguably the first robot in the modern sense of the word. It was developed in the late 1960s at the Stanford Research Institute (SRI). The robot's "brain" was an SDS-940 computer (later replaced by a PDP-10).

The software responsible for the robot's artificial intelligence contained over 300,000 36-bit words written in FORTRAN. Shakey was equipped with a camera, a range finder, and bump sensors, all of which were linked to a computer. Shakey's architecture was made up of three functional parts: environmental sensing, sequential action planning, and execution.

This concept would later evolve into the sense-plan-act (SPA) paradigm. Under this paradigm, the robot must continually obtain data about the world from its sensors, process it, build an internal model of the world from that data, and then plan and execute the necessary actions. By the early 1980s, it had become clear that the SPA paradigm was impractical: the delay between a change in the outside world and the robot's reaction to it was simply too long. Today, engineers often talk about a robot's "reaction time". For cutting-edge robots in a dynamic environment (for example, robotic quadcopters and cars), this parameter is measured in milliseconds or even microseconds.

For SPA-based robots of the 1970s, however, the reaction time could be tens or hundreds of seconds. The problem lay not so much in the computing performance of the time as in the sequential architecture itself: the robot was stuck waiting until planning and its response to the change were complete. That is clearly not a good approach when the environment changes quickly.
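To make the latency argument concrete, here is a minimal Python sketch of the arithmetic for a purely sequential SPA loop. The stage names follow the description above; the durations in the usage example are hypothetical illustrations, not measurements of Shakey or any other robot.

```python
from dataclasses import dataclass


@dataclass
class SpaStageTimes:
    """Hypothetical durations, in seconds, of the stages of one SPA cycle."""
    sense: float  # read all sensors
    model: float  # rebuild the internal model of the world
    plan: float   # search for a sequence of actions
    act: float    # execute the plan

    def cycle(self) -> float:
        # The stages run strictly one after another, so a cycle is their sum.
        return self.sense + self.model + self.plan + self.act


def reaction_time_bounds(t: SpaStageTimes) -> tuple[float, float]:
    """(best, worst) delay between a change in the world and the end of the
    action that responds to it, for a purely sequential SPA loop."""
    # Best case: the change happens just before sensing starts, so it is
    # picked up at once and handled within a single cycle.
    best = t.cycle()
    # Worst case: the change happens just after sensing ends. The robot
    # finishes the current cycle on stale data, then needs a full new cycle.
    worst = (t.cycle() - t.sense) + t.cycle()
    return best, worst
```

For example, `reaction_time_bounds(SpaStageTimes(sense=1.0, model=5.0, plan=30.0, act=4.0))` returns `(40.0, 79.0)`: with a 30-second planning step, the robot may need well over a minute to react to a change it has just missed, no matter how fast its motors are.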

1.2 Paradigm Shifts in the '80s

As a result, researchers in the 1980s developed a variety of new paradigms, which drove the next stage of robot evolution. Probably the most successful of them was the Subsumption Architecture. In it, a robot is built from layers of interacting finite state machines called behaviors, each of which connects sensors directly to actuators. Behavior-based robots outperformed SPA robots in efficiency and in their ability to respond to dynamic changes. However, the capabilities of this approach were quickly exhausted: long-term goals and the overall behavior of such robots could not be planned effectively. Further development of behavior-based robots led to the emergence of layered architectures.
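The idea of behaviors that connect sensors straight to actuators, with higher layers overriding lower ones, can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions: the three behaviors, the sensor names (`front_range`, `bumper`), and the fixed-priority arbitration are hypothetical, whereas the original Subsumption Architecture wires suppression and inhibition links between concurrently running state machines rather than using a single arbitration function.

```python
from typing import Optional

Sensors = dict[str, float]     # e.g. {"front_range": metres, "bumper": 0 or 1}
Command = tuple[float, float]  # (forward speed, turn rate), hypothetical units


class Wander:
    """Layer 0: with nothing else going on, drive forward."""
    def step(self, s: Sensors) -> Optional[Command]:
        return (0.5, 0.0)


class AvoidObstacle:
    """Layer 1: turn on the spot when something is close in front."""
    def step(self, s: Sensors) -> Optional[Command]:
        if s["front_range"] < 0.4:
            return (0.0, 1.0)
        return None  # no output: this layer does not suppress the ones below


class Escape:
    """Layer 2: a two-state machine (IDLE / BACKING_UP) triggered by the bumper."""
    def __init__(self, backup_steps: int = 3):
        self.backup_steps = backup_steps
        self.remaining = 0  # > 0 means we are in the BACKING_UP state

    def step(self, s: Sensors) -> Optional[Command]:
        if s["bumper"] > 0:
            self.remaining = self.backup_steps
        if self.remaining > 0:
            self.remaining -= 1
            return (-0.3, 0.0)
        return None


def arbitrate(layers: list, s: Sensors) -> Command:
    """Run every layer on the same sensor reading, then let the highest layer
    that produced a command suppress all layers below it."""
    outputs = [layer.step(s) for layer in layers]  # layers[0] is the lowest
    for cmd in reversed(outputs):
        if cmd is not None:
            return cmd
    return (0.0, 0.0)  # nobody asked for motion
```

With `layers = [Wander(), AvoidObstacle(), Escape()]`, a clear path yields `(0.5, 0.0)`, an obstacle ahead yields `(0.0, 1.0)`, and a bump makes the robot back up for the next few ticks regardless of what the lower layers want. There is no world model and no planner: each layer reacts to the current reading, which is why such robots respond quickly but struggle with long-term goals.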

Multilayer architectures consisted of separate hardware and software components linked together through defined interfaces, so that the layers could communicate with one another. The most common of these is the three-tiered architecture (3T). Its lowest tier, Behavioral Control, is in charge of interacting with the outside world, including cameras, sensors, and the motor controllers that move the robot or its manipulators.

The Executive, the second tier, supervises the tasks that the first tier must complete. The Executive layer is, in fact, a mediator, but it can also include specific algorithms to perform tasks. The third and highest level, Planning, is concerned with positioning, creating maps, and deciding on the appropriate actions.
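To illustrate how the three tiers divide the work, here is a minimal Python sketch. All class and method names (`Planner`, `Executive`, `BehavioralControl`, `move_to`, `grasp`) are hypothetical, and the hardware is simulated by a simple log.

```python
class BehavioralControl:
    """Lowest tier: skills that drive the hardware (simulated with a log)."""

    def __init__(self):
        self.position = 0
        self.log: list[str] = []

    def move_to(self, x: int) -> bool:
        self.position = x
        self.log.append(f"move_to {x}")
        return True  # a real skill would report success or failure from sensors

    def grasp(self, obj: str) -> bool:
        self.log.append(f"grasp {obj}")
        return True


class Planner:
    """Highest tier: decides what to do and never touches the hardware."""

    def plan(self, goal: dict) -> list[tuple[str, object]]:
        # Turn a goal into an ordered list of (skill name, argument) tasks.
        return [("move_to", goal["location"]), ("grasp", goal["object"])]


class Executive:
    """Middle tier: sequences the plan's tasks and dispatches them to skills."""

    def __init__(self, control: BehavioralControl):
        self.control = control

    def run(self, tasks: list[tuple[str, object]]) -> bool:
        for skill_name, arg in tasks:
            skill = getattr(self.control, skill_name)
            if not skill(arg):
                return False  # here a real executive would ask the Planner to replan
        return True
```

Running `Executive(control).run(Planner().plan({"location": 5, "object": "cup"}))` with `control = BehavioralControl()` returns `True` and leaves `control.log == ["move_to 5", "grasp cup"]`. The Planner never calls a skill, and the skills never decide what comes next; the Executive is the mediator between them.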

The 3T architectural principle was very popular in the late '80s and early '90s; NASA robots of that era, for example, were designed around it. In some versions of the 3T architecture, the second tier was virtually absent, and its responsibilities were partly assigned to the Planning layer. Such architectures are sometimes referred to as two-tiered.

1.3 Syndicate Model and Beyond

The Syndicate model is an extension of the 3T architecture that allows for the coordination of multiple robots. In this architecture, each layer interacts not only with neighboring layers, but also with layers of other robots at the same level.
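A minimal Python sketch of this wiring, assuming three tiers per robot and simple in-memory mailboxes (the `Layer`, `Robot`, and `connect` names are hypothetical): each tier keeps the classic 3T links to its neighbours on the same robot, and `connect` adds peer links to the same tier on every other robot.

```python
class Layer:
    """One tier of one robot in a Syndicate-style system (hypothetical names)."""

    def __init__(self, robot: str, level: str):
        self.robot = robot
        self.level = level
        self.vertical: list["Layer"] = []  # neighbouring tiers on the same robot
        self.peers: list["Layer"] = []     # the same tier on other robots
        self.inbox: list[tuple[str, str, str]] = []  # (sender robot, sender level, message)

    def send(self, targets: list["Layer"], msg: str) -> None:
        for layer in targets:
            layer.inbox.append((self.robot, self.level, msg))


class Robot:
    LEVELS = ("planning", "executive", "behavioral")

    def __init__(self, name: str):
        self.name = name
        self.layers = {lvl: Layer(name, lvl) for lvl in self.LEVELS}
        # Classic 3T links: each tier talks to the tier directly above and below it.
        for i, lvl in enumerate(self.LEVELS):
            self.layers[lvl].vertical = [
                self.layers[self.LEVELS[j]]
                for j in (i - 1, i + 1)
                if 0 <= j < len(self.LEVELS)
            ]


def connect(robots: list[Robot]) -> None:
    """Syndicate extension: link every tier to the same tier on every other robot."""
    for r in robots:
        for lvl, layer in r.layers.items():
            layer.peers = [other.layers[lvl] for other in robots if other is not r]
```

For example, after `connect([a, b, c])`, calling `a.layers["executive"].send(a.layers["executive"].peers, "beam lifted")` delivers the message to the Executive tiers of robots B and C only; their Planning and Behavioral Control tiers are not involved.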

Modern concepts for robot software and architecture emerged in the late 1990s.

2. Standard Architecture of Modern Robots

Trivial as it may sound, robots are built much like humans.

Despite the variety and complexity of modern robots, the basic hardware architecture of most of them is borrowed directly from nature. The role of the robot's brain is played by a general-purpose computer, built on either an x86 or a RISC platform, depending on the task.

The robot's sensing components are connected to this computer. Examples of such components include cameras, microphones, sonars, radars, or LiDARs, as well as local and global positioning modules. These are the robot's "eyes", "ears", and other senses.

Specialized modules, such as Nvidia Jetson, can be used for specific computations like image processing. The brain computer is in charge of processing sensor data, building a map of the world around it (as the robot sees it), and planning actions, all by means of dedicated software. In effect, it is the counterpart of the Planning tier in the 3T architecture.

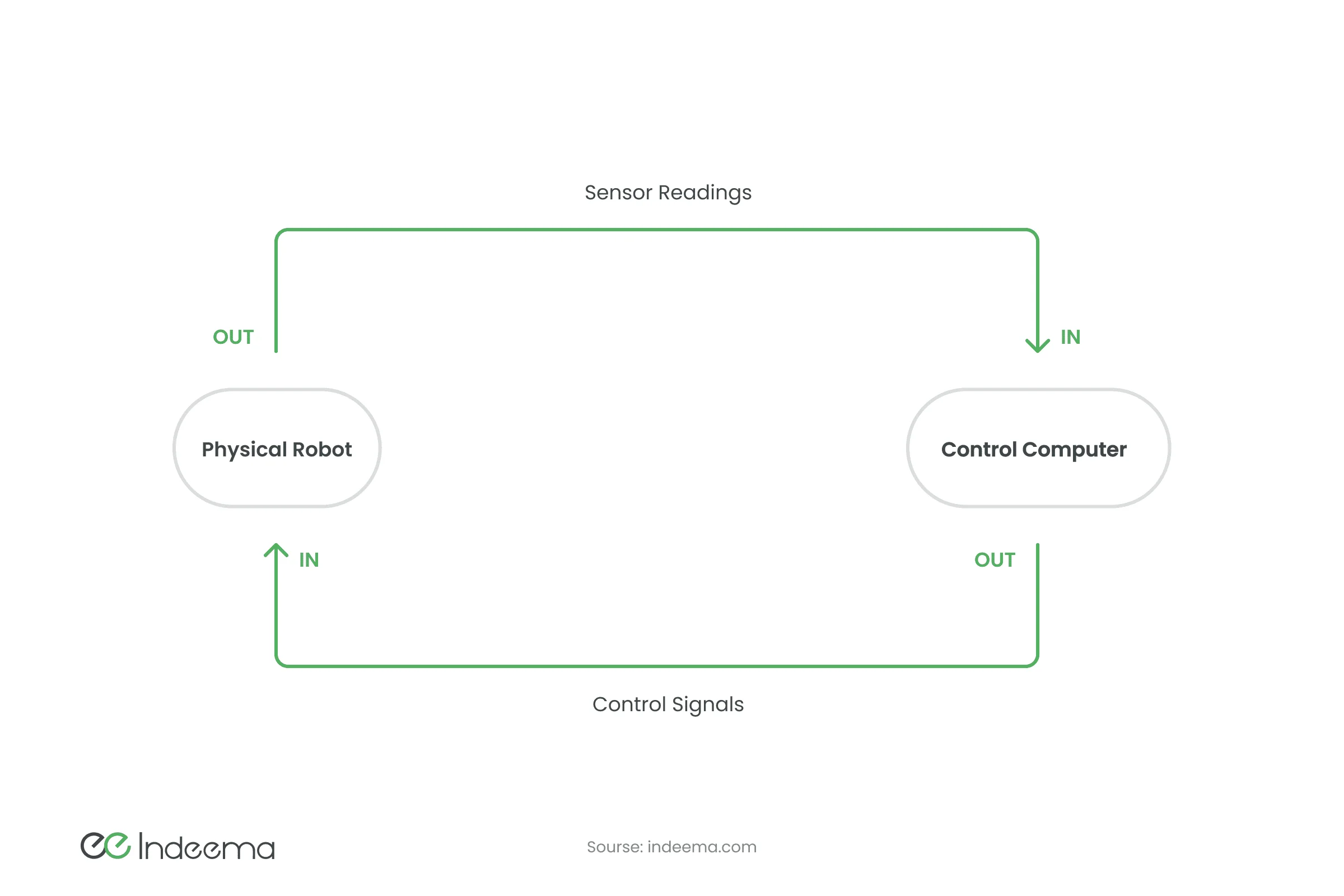

Interestingly, the main computer usually does not control the movements of the robot and its parts directly. That is handled by a separate computer, which interprets and executes commands, detects collisions, and processes feedback from the moving parts.

This second computer is the "backbone" that controls the robot's "arms" and "legs". The division serves a purpose: if the central computer controlled the robot's movements directly, the problem that plagued the first robots could resurface, namely an unacceptably slow response to a dynamic environment.

Computer performance has advanced significantly, but robots and their tasks have grown more demanding as well. When a change in the environment requires a response, the main computer may simply be busy with another task.

The second computer is analogous to the Behavioral Control tier of the 3T model. In most cases, it is built around a microcontroller that runs an infinite loop, waiting for a command or message. This could be an Arduino-like board with dedicated firmware. Depending on its purpose, a robot can use several such boards.

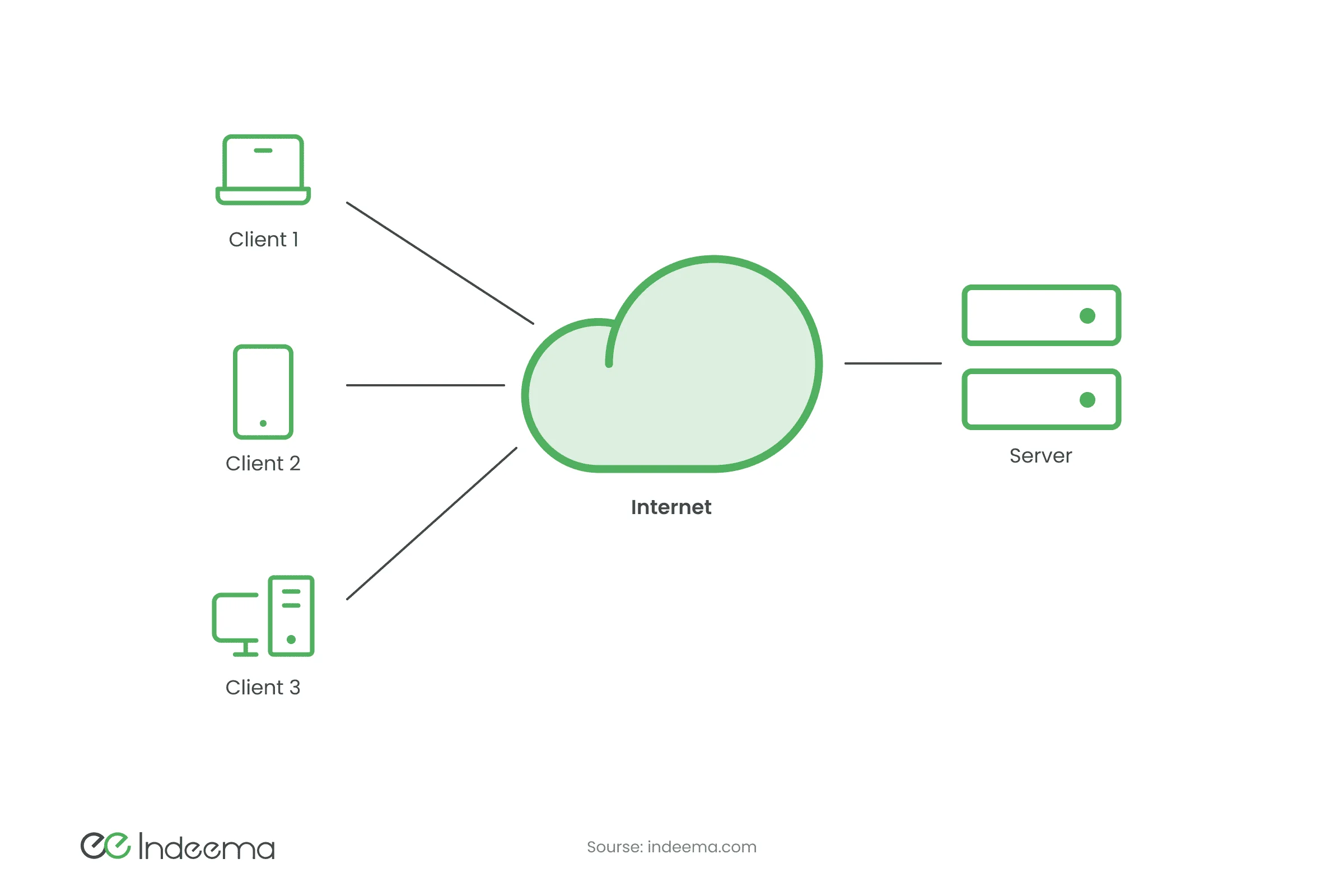
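The infinite loop of such a controller can be sketched as follows. On a real robot this would be firmware (for example, C or C++ on an Arduino-like board); here it is simulated in Python for illustration only. The text command format (`SPEED 40`, `STOP`, `SHUTDOWN`) and the collision check are hypothetical assumptions, and `SHUTDOWN` exists only so that the demo loop can end.

```python
import queue


class MotorController:
    """Simulated 'backbone' controller (hypothetical). On a real robot this loop
    would run as firmware on a microcontroller."""

    def __init__(self, commands: "queue.Queue[str]", collision_sensor):
        self.commands = commands                  # messages from the main computer
        self.collision_sensor = collision_sensor  # callable: True while in contact
        self.speed = 0
        self.events: list[str] = []

    def handle(self, cmd: str) -> bool:
        """Interpret one text command; return False to leave the loop."""
        name, _, arg = cmd.partition(" ")
        if name == "SPEED":
            self.speed = int(arg)
        elif name == "STOP":
            self.speed = 0
        elif name == "SHUTDOWN":  # demo only: real firmware would never exit
            return False
        else:
            self.events.append(f"unknown command: {cmd}")
        return True

    def run(self, poll_s: float = 0.01) -> None:
        while True:
            # Safety check on every pass, without waiting for the main computer.
            if self.collision_sensor() and self.speed != 0:
                self.speed = 0
                self.events.append("collision: motors stopped")
            try:
                cmd = self.commands.get(timeout=poll_s)
            except queue.Empty:
                continue  # no message yet: keep looping
            if not self.handle(cmd):
                break
```

Feeding it a queue containing `"SPEED 40"` and `"SHUTDOWN"` with `collision_sensor=lambda: False` leaves `speed == 40`; with a sensor that always reports contact, the loop stops the motors on its own before the next command arrives. That local reaction, independent of whatever the main computer is busy with, is the point of the two-computer split.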

Because the software on a robot's central computer performs functions similar to those of an operating system, robotic software frameworks are often called operating systems (ROS, IKuka OS). This software lets the architecture's components communicate with one another by exchanging data and sending commands. There are two basic communication approaches: client-server and publish-subscribe.

3. Communication Protocols and Middleware

3.1 Client-Server

In the client-server communication protocol (also known as point-to-point), components communicate with each other directly. For example, one component (the client) may invoke the functions and procedures of another component (the server). In robotics, client-server components can be robot parts (such as a depth camera with a built-in computer, or a manipulator controller) or individual robots integrated into one system to achieve a common goal.
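A minimal Python sketch of this request/response pattern, assuming a JSON message format of our own choosing (`id`, `method`, `params`) and a direct in-process call standing in for the network link. `DepthCameraServer`, `Client`, and the procedure names are hypothetical.

```python
import json


class DepthCameraServer:
    """Hypothetical server component: a depth camera with its own computer."""

    def __init__(self):
        self.exposure_ms = 10
        # Procedures that clients are allowed to invoke by name.
        self._procedures = {
            "get_distance": self.get_distance,
            "set_exposure": self.set_exposure,
        }

    def get_distance(self, x: int, y: int) -> float:
        return 1.25  # placeholder for a real depth lookup at pixel (x, y), metres

    def set_exposure(self, ms: int) -> int:
        self.exposure_ms = ms
        return ms

    def handle(self, request: str) -> str:
        """Decode a JSON request, run the named procedure, and encode the reply."""
        req = json.loads(request)
        proc = self._procedures.get(req["method"])
        if proc is None:
            return json.dumps({"id": req["id"], "error": "unknown method"})
        return json.dumps({"id": req["id"], "result": proc(**req["params"])})


class Client:
    """Talks to exactly one known server (point-to-point)."""

    def __init__(self, server: DepthCameraServer):
        self.server = server
        self._next_id = 0

    def call(self, method: str, **params):
        self._next_id += 1
        request = json.dumps({"id": self._next_id, "method": method, "params": params})
        reply = json.loads(self.server.handle(request))  # waits until the server answers
        if "error" in reply:
            raise RuntimeError(reply["error"])
        return reply["result"]
```

For example, `Client(DepthCameraServer()).call("get_distance", x=320, y=240)` returns `1.25`. The client must know which server to address and waits for its answer, which is what distinguishes this model from publish-subscribe.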

3.2 Publish-Subscribe

In the publish-subscribe protocol (also called broadcast), a component publishes information to a data bus, and any other component can subscribe to that data. A central process, much like an operating system, routes data between publishers and subscribers. In most cases, components publish data while also subscribing to data published by other components. Such messages are often encoded in XML (Extensible Markup Language) or JSON (JavaScript Object Notation). These formats also make it easy to send messages over HTTP/HTTPS and to integrate the system with web-based applications.

For example, consider a camera that broadcasts a video stream to which object-recognition components can subscribe. The camera, in turn, must subscribe to a control process that changes its settings and turns it on and off. Likewise, every sensor in the system must publish its measurements. Publish-subscribe communication is typically confined to a single robot; to exchange data with other robots, the client-server approach is used as well.
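The camera example can be sketched with a minimal in-process message bus in Python. The `MessageBus` and `Camera` classes and the topic names `camera/frames` and `camera/control` are hypothetical; real middleware such as ROS provides its own transport and message types. Messages are round-tripped through JSON here to mimic serialization over a network.

```python
import json
from collections import defaultdict
from typing import Callable


class MessageBus:
    """Minimal in-process publish-subscribe bus (hypothetical)."""

    def __init__(self):
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: dict) -> None:
        # Serialize once, as a networked bus would, and give each subscriber its own copy.
        payload = json.dumps(message)
        for callback in self._subscribers[topic]:
            callback(json.loads(payload))


class Camera:
    """Publishes frames and is itself a subscriber to control commands."""

    def __init__(self, bus: MessageBus):
        self.bus = bus
        self.enabled = False
        self.frame_id = 0
        bus.subscribe("camera/control", self.on_control)

    def on_control(self, msg: dict) -> None:
        self.enabled = msg["enabled"]

    def capture(self) -> None:
        if self.enabled:
            self.frame_id += 1
            # A real frame would carry image data; metadata stands in for it here.
            self.bus.publish(
                "camera/frames",
                {"frame_id": self.frame_id, "width": 640, "height": 480},
            )
```

An object recognizer only needs `bus.subscribe("camera/frames", callback)`; the camera never learns who, if anyone, is listening. This decoupling is what makes it easy to add new modules to the system.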

Most modern robot middleware implements communication between components using both approaches at the same time. This enables the development of a more flexible system and the simple integration of new modules and components, both software and hardware.

4. Robots with Specific Constructions

Beyond the standard designs, there are some rather strange and unusual robots, which are also part of robot evolution. These are often robots that perform a specific task or work in unusual conditions. Let's look at a few of them.

4.1 Soft Robots

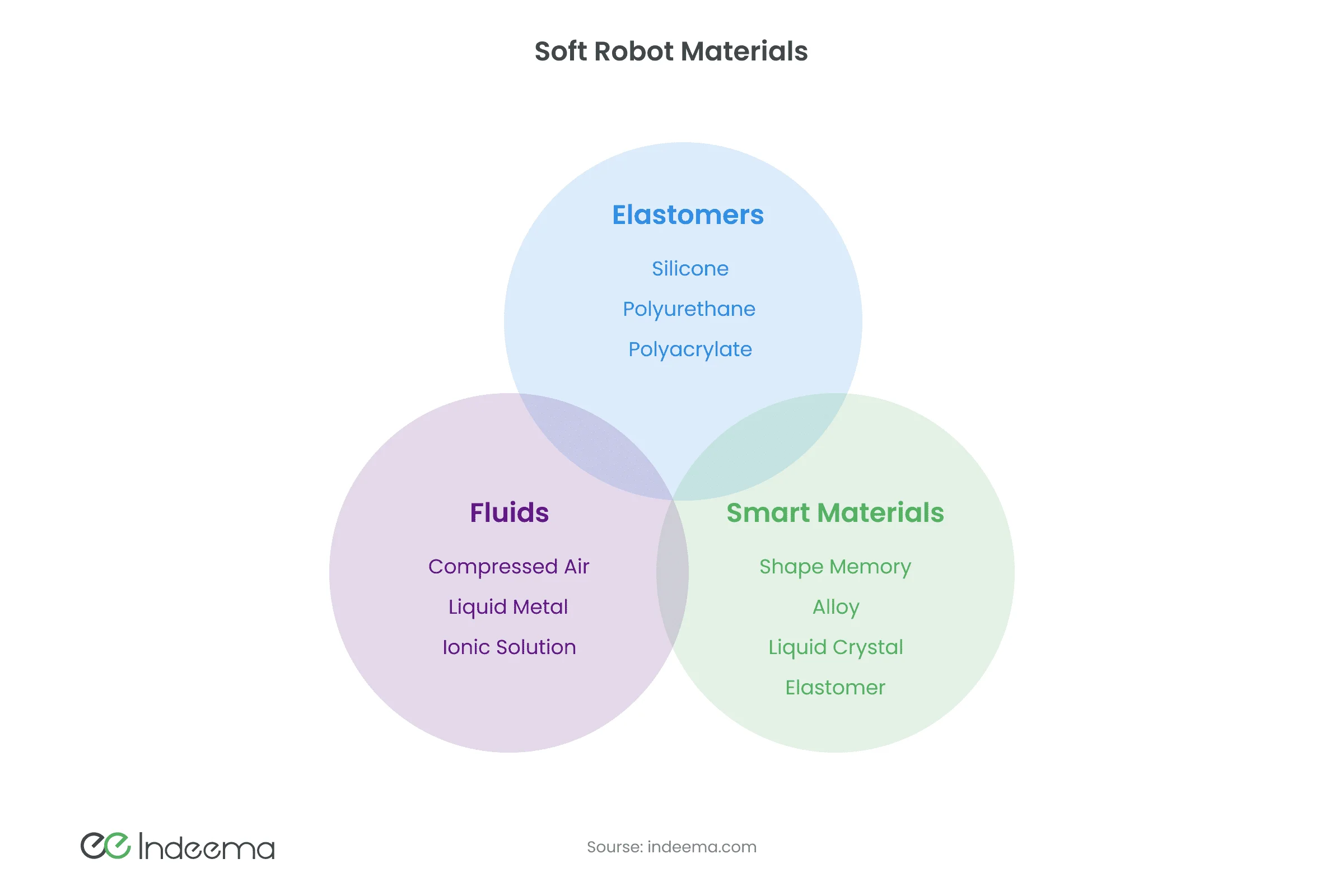

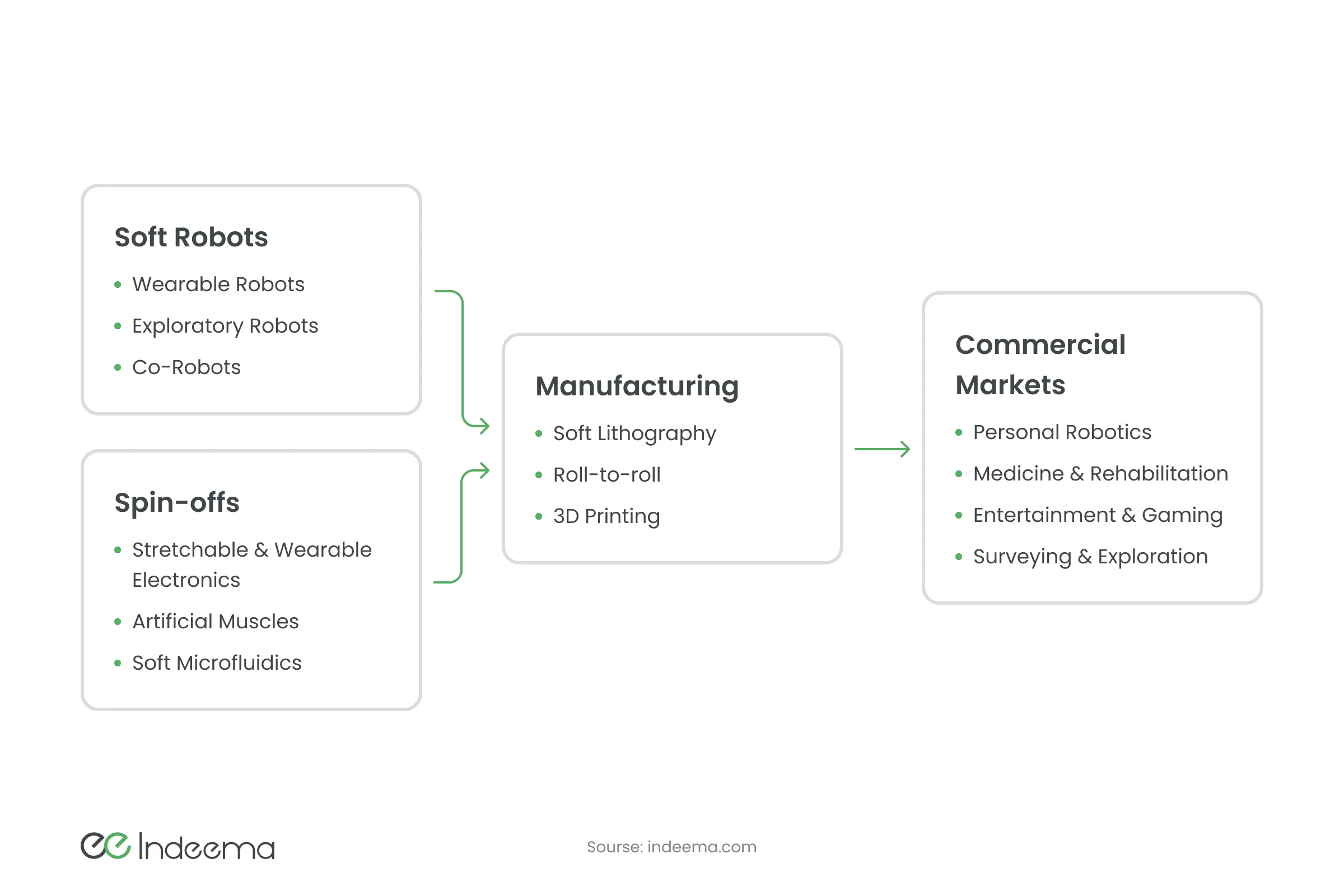

Soft robotics is an entire field dedicated to designing robots from non-standard, flexible materials. Such robots are made of materials with no fixed shape that can stretch and wrinkle like living organisms. Soft materials are frequently used to make manipulators or grippers for handling fragile objects and objects without a fixed shape. In some robots, however, the base, the frame, and most of the other parts are made of textile or plastic tubes filled with air or liquid.

Some of these robots may have no electronic components at all and rely solely on pneumatics to perform tasks in hazardous environments. Naturally, soft robots often have a unique and non-trivial architecture. They are very promising: besides being able to handle fragile objects, they are very "people friendly", because their soft construction makes them far less likely to injure people than other types of robots. They can, for example, be used as exoskeletons to assist in the rehabilitation of an injured person.

4.2 Nano- and Micro-Robots

Micro- and nanorobots are built on structures such as metal-polymer structures or DNA molecules. Such robots can carry out pre-programmed tasks inside the human body or another organism. Little can be said about their architecture in general, because it depends so heavily on the task at hand.

However, such robots are built with a set of tools, the most important of which is a software package for modeling the properties and behavior of nanorobots; without it, developing and testing robots for specific tasks would take far too long. There must also be a production line that manufactures robots from templates derived from the tested models. The resulting robots can, for example, deliver drugs to specific locations in the human body or isolate damaged organs.

So far, nano- and microrobots have faced numerous challenges: working with DNA is expensive (especially producing the first batch, which is then copied), while robots made of other materials are cheaper but less functional and much larger. The everyday use of such robots is still a thing of the future.

4.3 Snakebot

The robot snake has a unique design (and architecture). It consists of several (four or more) interchangeable modules connected in series, which lets it mimic the movement of a snake. This non-standard structure allows it to work in complex environments, for example, to examine the human esophagus or intestine. A single snake robot can move across rough surfaces, climb stairs, and even climb trees. There are also prototypes of flying, snake-like multicopter robots that can manipulate objects in the air using their whole body.
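One common way to make serially connected modules produce snake-like motion, which is not described in this article and is added here only as an illustration, is to drive every joint with the same sine wave, shifted in phase from joint to joint (a discretized form of the so-called serpenoid curve). The function name, parameter names, and default values below are assumptions.

```python
import math


def serpentine_joint_angles(
    t: float,
    n_joints: int,
    amplitude: float = 0.5,          # peak joint angle, radians (assumed value)
    omega: float = 2.0,              # temporal frequency, rad/s (assumed value)
    phase_lag: float = math.pi / 3,  # phase shift between neighbouring joints
    offset: float = 0.0,             # constant bias; non-zero values make the snake turn
) -> list[float]:
    """Joint angles (radians) at time t for a lateral-undulation gait.

    Joint 0 is assumed to be nearest the head. Every joint follows the same
    sine wave, delayed by phase_lag relative to the joint in front of it, so
    the bending pattern travels from head to tail along the body.
    """
    return [
        amplitude * math.sin(omega * t - i * phase_lag) + offset
        for i in range(n_joints)
    ]
```

Sampling this function at the controller's update rate and sending each angle to the corresponding module makes a bending wave run along the body; because the modules are interchangeable, the same code works for any number of them.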

Conclusions

Robots and their architecture are evolving rapidly. Perhaps tomorrow a new approach or principle in robotics will change everything we know, and we will have to adapt to new realities. This could be a breakthrough in data processing, comparable to the widespread adoption of neural networks, or an entirely new kind of computer. Fortunately, modern concepts of robot architecture are flexible enough to take full advantage of such changes.
